Is AI the New Phrenology? Why Realism Must Guide Our AI Journey

Written by Stuart McClure • Sep 18, 2025

Is AI the new Phrenology? Can Realism save us all?

Without a shadow of a doubt, AI poses an imminent existential threat to the knowledge workforce. The cost of knowledge and deep expertise has dropped to micro-pennies. But don't let your cognitive biases throw the baby out with the bathwater. As operators and leaders, we can't afford to get swept up in the frenzy. We need to be discerning, critical, and, frankly, a little skeptical.

As a serial entrepreneur, I've witnessed firsthand the transformative power of technology, both its potential and its pitfalls. From my early days building cybersecurity companies like Foundstone and Cylance to my current focus on AI-driven ventures like Wethos AI, NumberOne AI, and Qwiet AI, I've learned that innovation isn't about chasing the latest trend; it's about solving real-world problems with what is actually available (and maybe breaking some glass along the way). Still, the AI hype is undeniable. Even I get sucked into it from time to time, despite having lived (painfully) through the dot-com bubble, the post-9/11 venture pause, the 2008 housing crisis, Covid-19, and more. Anyone remember blockchain?

The real challenge lies in separating genuine breakthroughs from inflated expectations. I've seen how easily we get caught up in the excitement, extrapolating from impressive demos to unrealistic visions of the future. Excitement builds, everyone jumps on the bandwagon, and then… well, sometimes things don't quite pan out as promised. My experience (and yes, now my grey hairs) has taught me the value of a pragmatic, disciplined approach to AI adoption, one that focuses on strategic alignment, realistic expectations, and rigorous evaluation. We need to be critical, discerning, and willing to challenge the hype.

“In an emergency … BREAK THE GLASS!”

(Any time I can sneak in a Die Hard reference… I don't want to, I gotta.)

One advantage the human brain still holds over any AI today is abductive reasoning: the ability to infer the most plausible explanation from our lived experience of the world, and to think backwards, forwards, and in parallel threads of potential outcomes, at lightning speed and with minimal energy expense. So let's step into the wayback machine for just a minute.

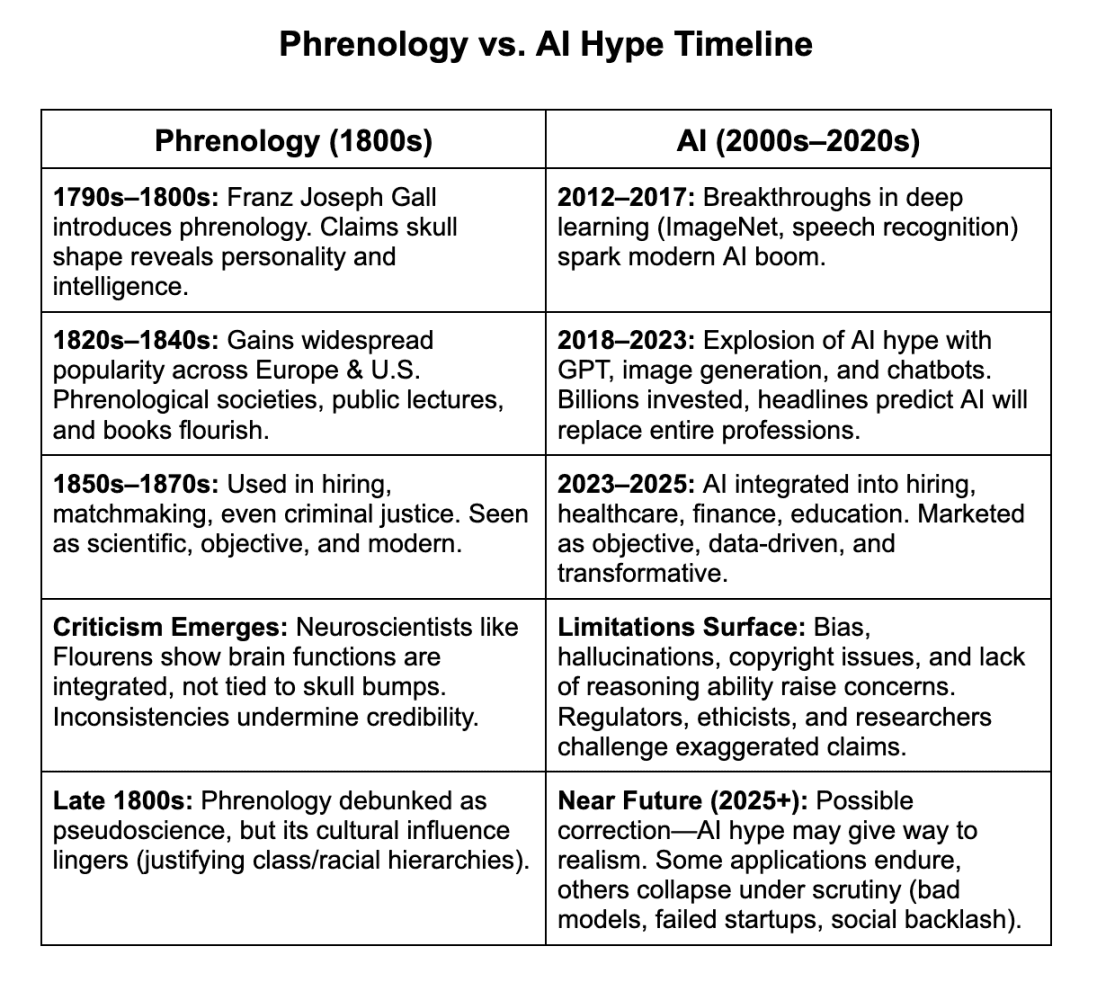

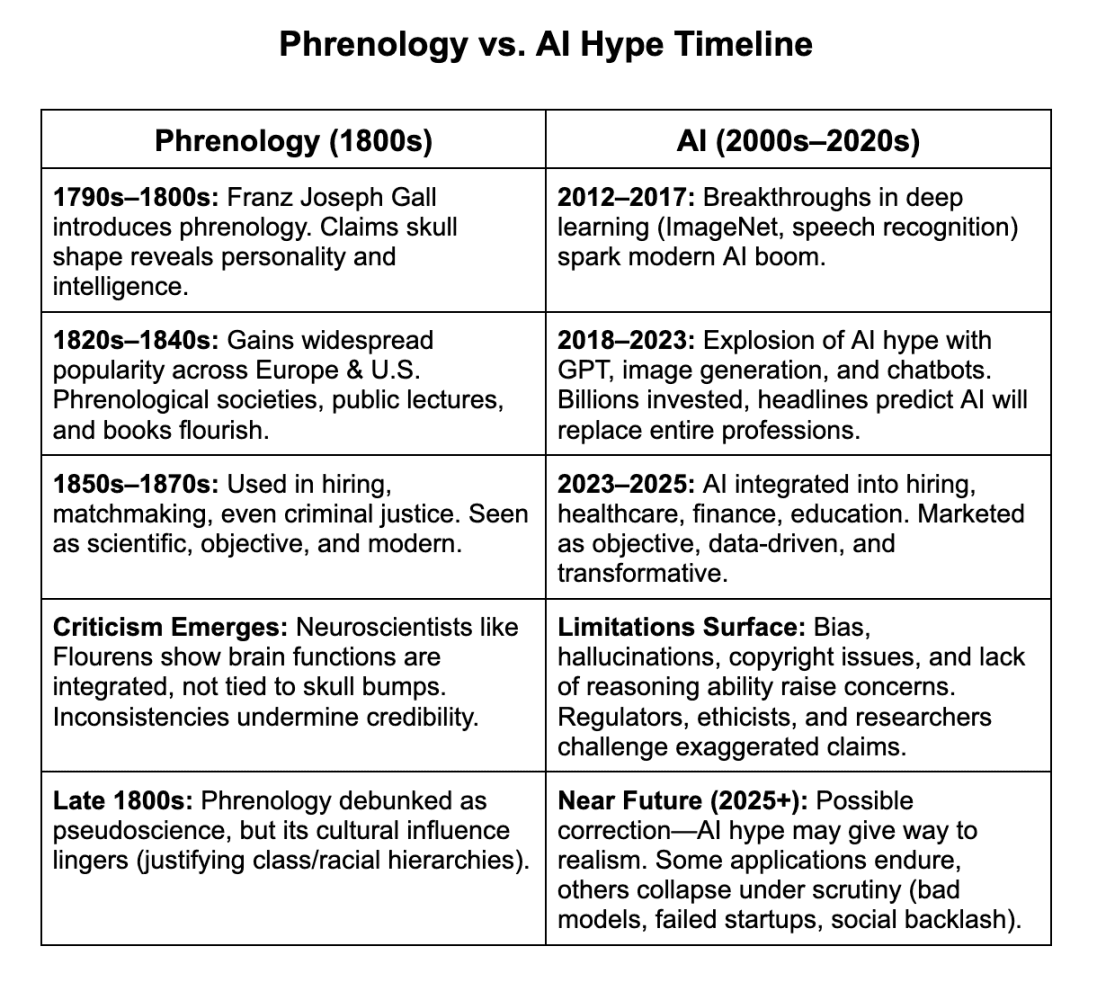

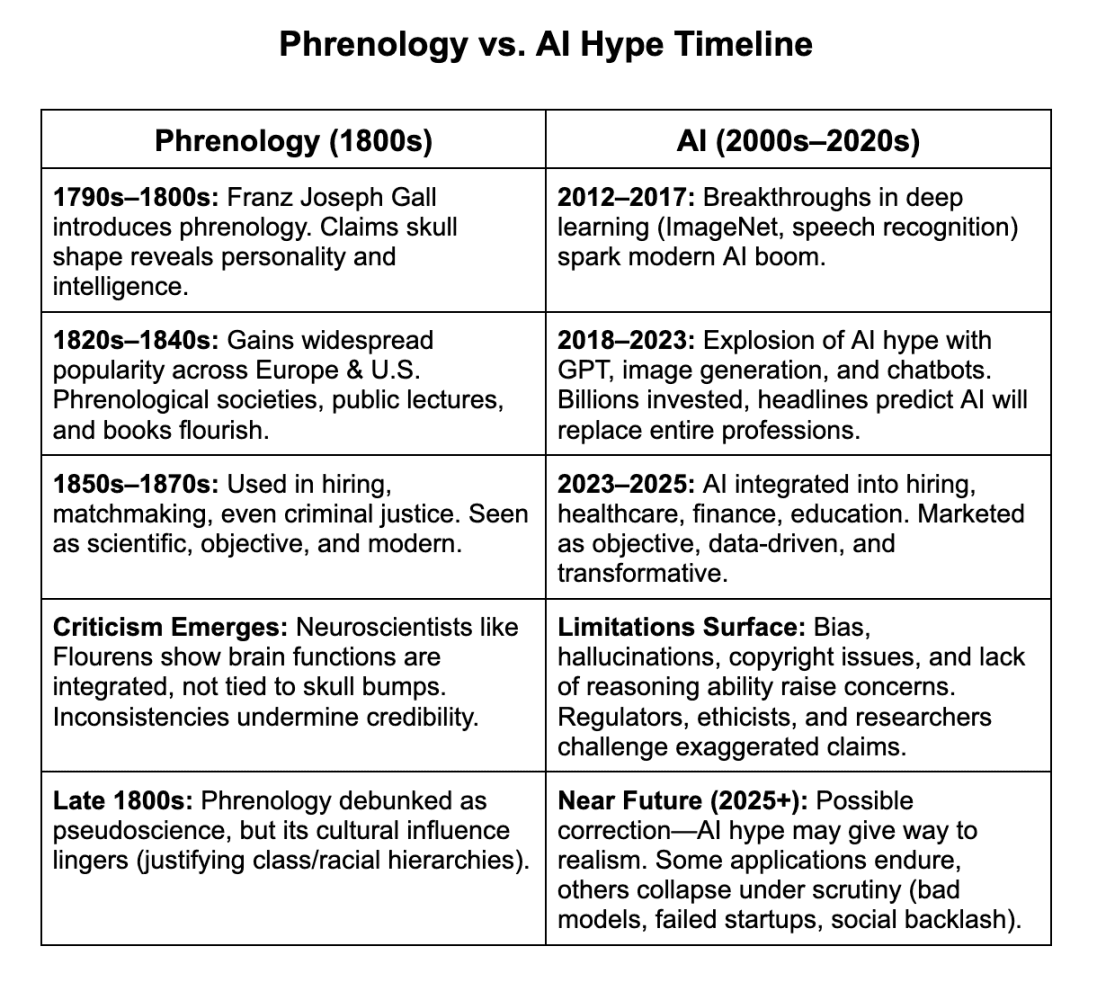

Imagine yourself in the late 18th and early 19th centuries, when it was widely believed that the shape of a person's skull reflected their personality, intelligence, morality, and mental faculties. This practice, called phrenology, was a craze that lasted for decades, fueled by cognitive biases and a veneer of science.

Franz Joseph Gall, a German physician and anatomist, developed and promoted this theory, achieving celebrity status and building a following that reinforced his authority. Gall's framework conveniently aligned with the prevailing prejudices of the time, such as class distinctions, gender stereotypes, and, later, racial hierarchies. This alignment created a reinforcing loop: elites wanted to believe it, and Gall gained validation from their acceptance. Even when cracks began to show in his theory, Gall had career, reputation, and financial incentives to keep promoting it. Later discoveries about the localization of brain functions (e.g., the areas governing speech) showed that phrenology's maps were overly simplistic and wrong.

Like phrenology, AI wears the cloak of science and math. Its outputs feel "objective" because they come from data and algorithms, yet they can mask deep biases. And as with phrenology, our response to AI reflects the human tendency to confuse scientific-sounding methods with scientific truth.

The Cognitive Bias is strong with this one…

With AI, we're seeing the same kind of inflated expectations. We see a cool demo of an AI generating realistic images or writing human-like text, and suddenly we think it's ready to take over the world. That's the availability heuristic at play: we overestimate the likelihood of something happening based on how easily examples come to mind.

Then there's confirmation bias. We tend to gravitate towards information that confirms our existing beliefs. If we're already excited about AI, we'll focus on the positive stories and ignore the potential downsides.

And let's not forget the anchoring effect. When someone like Elon Musk predicts AGI by 2029, that date becomes the anchor for everyone's expectations, even if it's completely arbitrary. We get fixated on it, and it distorts our planning and investment decisions.

Finally, there's optimism bias – the tendency to overestimate the likelihood of positive outcomes. We want to believe AI will solve all our problems, but it's not a magic bullet. It's a powerful tool, but it requires careful planning, implementation, and ongoing evaluation.

Quick Guidance

So, what's the solution? How do we navigate this AI hype cycle and make smart decisions for our businesses? It comes down to three key things:

Strategic Alignment: Don't adopt AI just for the sake of it. Figure out where it can actually add value to your business, and plug it into your existing, real-world workflows. Align your AI initiatives with your core strategic objectives.

Realistic Expectations: AI is not going to transform your business overnight. It's a journey, not a destination. Be prepared for setbacks, challenges, and a learning curve.

Disciplined Evaluation: Don't believe the hype. Rigorously evaluate AI solutions based on their actual performance, not just marketing promises. Measure the ROI and be willing to pivot if something isn't working.
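To make "measure the ROI and be willing to pivot" a little more concrete, here is a minimal Python sketch, assuming ROI is computed as (value gained - cost) / cost over a pilot period. The `PilotResult` record, the `roi` and `evaluate_pilot` helpers, the thresholds, and the pilot figures are all hypothetical, chosen for illustration rather than taken from any real deployment or from the Wethos AI framework.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    """Measured outcome of one (hypothetical) AI pilot over an evaluation period."""
    name: str
    cost: float               # total cost: licenses, integration, staff time
    value_gained: float       # measured benefit, e.g. hours saved x loaded hourly rate
    claimed_accuracy: float   # what the vendor's marketing promised (0..1)
    measured_accuracy: float  # what your own evaluation on real workflows found (0..1)


def roi(cost: float, value_gained: float) -> float:
    """Return on investment as a fraction: (value_gained - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (value_gained - cost) / cost


def evaluate_pilot(p: PilotResult, min_roi: float = 0.2, max_claim_gap: float = 0.05) -> str:
    """Recommend 'pivot', 'review', or 'continue' from measured results.

    The thresholds are illustrative assumptions; set your own.
    """
    if roi(p.cost, p.value_gained) < min_roi:
        return "pivot"     # not paying for itself at the required margin
    if p.claimed_accuracy - p.measured_accuracy > max_claim_gap:
        return "review"    # paying off, but not performing as marketed
    return "continue"


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    pilot = PilotResult("invoice triage", cost=50_000, value_gained=65_000,
                        claimed_accuracy=0.95, measured_accuracy=0.82)
    print(f"ROI: {roi(pilot.cost, pilot.value_gained):.0%}")  # ROI: 30%
    print(evaluate_pilot(pilot))                              # review
```

ROI and the gap between marketed and measured performance are checked separately, so a tool that pays for itself but underdelivers on its promises gets flagged for review instead of being waved through on the strength of the demo.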

At Wethos AI, we help companies navigate these AI workforce complexities. We provide a framework for disciplined AI adoption, helping you identify the right problems to solve, build effective teams, and measure the impact of your AI initiatives. We're not about the hype; we're about real-world results.

So, if you're ready to move beyond the AI hype and develop a pragmatic strategy for your business, let's talk.